Science is Broken

Formulating hypotheses and designing experiments to confirm or falsify them is too slow, too unreliable, and far too path-dependent. Despite ever-increasing investment, progress is slowing. Most papers are wrong. Ideas are getting harder to find.

We suspect that this is partly due to cognitive biases implicit in the established scientific method, which steer the scientific endeavour as a whole towards ever narrower, more path-dependent slices of the space of potential discoveries.

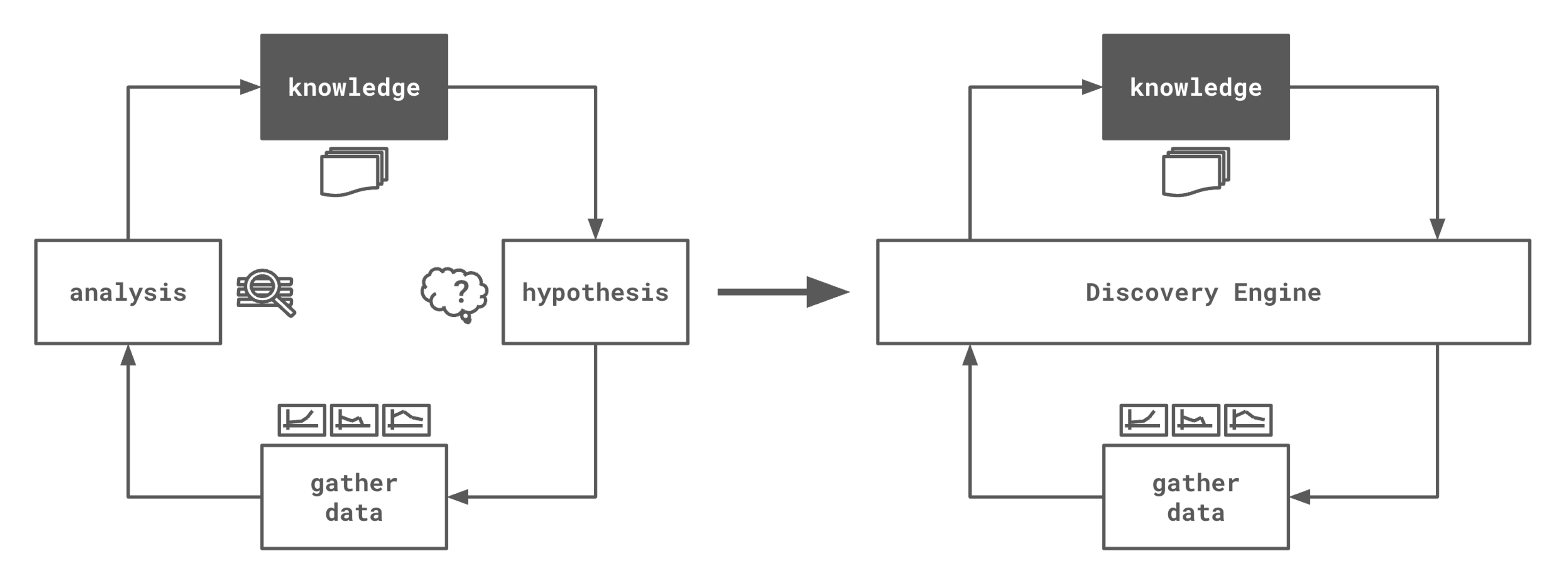

The Status Quo

Hypothesis → Experiment → Analysis → Publication?

Hypothesising

We rely on humans to ask the right questions. This was the best we could do 400 years ago, and may still be the best we can do in domains where data remains difficult (or expensive) to gather. But in many fields data is increasingly abundant – and it seems deeply wasteful to limit potential discoveries to the hypotheses a human can come up with, which are necessarily path-dependent (shaped by personal experience or prior literature).

Some humans are better at this than others – but still, we’re accessing only a tiny fraction of the potential idea space. Worse, that tiny fraction may well be barren and waste everyone’s time, since the existing publications that guide this kind of hypothesis generation very often do not replicate!

(Note: LLMs inherit these problems – and likely amplify them. Hallucinations in esoteric topics are extremely difficult to detect. A convincing lie is worse than an obvious one.)

Experiments and Analysis

Confirmation bias is real. We typically design experiments to test for only a few things – explicitly looking to confirm or deny our hypotheses. This biases the levers we choose to pull and the variables we choose to measure. And then it biases the analysis too. This further limits the potential discoveries we can make to the tiny slice of the world defined by our hypothesis. The whole cycle is impoverished.

Misaligned Incentives

Everyone in academia mourns the publish-or-perish paradigm we’ve gotten stuck in, and the p-hacking, selective publishing, and chilling effects it creates downstream. Since this problem is outside our scope (for now), we won’t discuss it further – but let’s acknowledge that it’s an important part of the puzzle.

Let the data speak!

So what’s the solution? We think it’s data-driven discovery. Something like a new scientific method.

Scientists of the world: instead of hypothesising and selectively gathering data to confirm or deny your hypotheses, we invite you to begin gathering as much data as you possibly can about the phenomena of interest – indiscriminately. Measure everything you can think of. Every single thing you can afford to. Throw in existing datasets. Scrape the web. Beg your friends. Big data.

“But Leap,” the crowd roars – “how could we ever make sense of this huge blob of data, if we don’t have a hypothesis to guide us?! How will we know where to look, and what to look for?”

Never fear, dear readers. Now that we have superhuman pattern-recognition engines at our fingertips, you don’t need to make sense of the data yourself.

Machine learning can do it for you. (This wasn’t possible before the advent of big data and hefty compute to go with it – but now? Now’s the time.)

Machine learning – especially deep learning – can find complex non-linear patterns in arbitrary data. The problem is that up until now, we’ve lacked the technology to understand what these models have learned from the data. Black-box models certainly have their place in science (see: AlphaFold!), but they’re of limited use for open-ended discovery.

Deep learning interpretability changes this – and interpretability is the core of our research at Leap. We design algorithms that make it possible for humans to understand the patterns that these models have learned – and by doing so, unlock their potential to teach us something new.

And that’s exactly what our Discovery Engine does: it uses deep learning to systematically find patterns in datasets of arbitrary size and complexity, and applies our state-of-the-art interpretability to render them human-parseable.

Some of these patterns are just noise, of course. And many are already known. But often, we also find patterns that are not only novel, but that make sense when contextualised by established science.

And, because the whole thing is automated, it’s hundreds of times faster than existing methodologies – and completely reproducible.

This is a general-purpose technology that will fundamentally change how science is done.

How does it work?

Our key insight is that machine learning models – particularly deep neural networks – often find patterns in data that humans miss. This can lead to superhuman performance, where models predict things that humans simply don’t know how to predict. And if a model can do something you can’t, it knows something you don’t.

That’s what we extract. With our state-of-the-art AI interpretability, we’re able to find out what the model has learned, and render those patterns human-readable. We do all of this automatically – from raw data to final report.

This is the real AI revolution: discovery.
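A toy illustration of “if a model can do something you can’t, it knows something you don’t”: below, a straight-line fit and a k-nearest-neighbour regressor are trained on the same synthetic data and compared on held-out samples. The U-shaped data-generating function, the choice of k-NN, and every name in the sketch are assumptions made for illustration; this is not the Discovery Engine’s modelling stack. When the flexible model clearly beats the linear baseline on data it has never seen, that gap is evidence of structure the linear view misses, which is the kind of learned knowledge interpretability then has to extract.

```python
import random


def make_data(n, seed):
    """Hypothetical data: y is a U-shaped function of x plus a little noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [(x - 0.5) ** 2 + rng.gauss(0.0, 0.01) for x in xs]
    return xs, ys


def fit_linear(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def fit_knn(xs, ys, k=10):
    """k-nearest-neighbour regression: average y over the k closest training x."""
    train = list(zip(xs, ys))

    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / k

    return predict


def mse(model, xs, ys):
    """Mean squared error of a model's predictions."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Train on one sample, evaluate on a separate held-out sample.
x_train, y_train = make_data(1000, seed=1)
x_test, y_test = make_data(300, seed=2)

linear_err = mse(fit_linear(x_train, y_train), x_test, y_test)
knn_err = mse(fit_knn(x_train, y_train), x_test, y_test)
print(f"held-out MSE  linear: {linear_err:.5f}  k-NN: {knn_err:.5f}")
```

Any flexible model with proper held-out evaluation would make the same point; k-NN just keeps the example dependency-free.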

How is it different from...

1. Data analytics?

Existing data analytics platforms require data science expertise to use and interpret. They’re directed by human assumptions (biases) about where interesting patterns might lie, and are mostly used to confirm hypotheses. Part of the reason is that exploratory data analysis often assumes linear relationships and, even with the fanciest visualisations, struggles to capture complex, non-linear interactions.

For example:

Say we have features A, B, and C, and we want to understand their relationship with D. Some basic statistical analysis might tell us: A correlates moderately with D, B correlates negatively, and C has no correlation. But what if high values of A predict high D only when C is within a certain range? Unless we brute-force every possible combination (which is intractable for real-world datasets), or already know where to look, we’ll completely miss that kind of pattern.
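To make this concrete, here is a small synthetic version of the A/B/C/D example in plain Python. The data-generating rule (A only affects D when 0.3 < C < 0.7), the window bounds and the noise level are made up for illustration. Marginal correlations show A as moderately positive, B as negative and C as essentially irrelevant, yet conditioning on the right range of C reveals a strong relationship between A and D.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def in_window(c):
    """Hypothetical range of C in which A drives D."""
    return 0.3 < c < 0.7


rng = random.Random(0)
rows = []
for _ in range(20_000):
    a, b, c = rng.random(), rng.random(), rng.random()
    # D rises with A only inside the C window, and falls with B everywhere.
    d = (a if in_window(c) else 0.0) - 0.5 * b + rng.gauss(0.0, 0.05)
    rows.append((a, b, c, d))

A, B, C, D = zip(*rows)
corr_a, corr_b, corr_c = pearson(A, D), pearson(B, D), pearson(C, D)

# The same A-D correlation, computed separately inside and outside the window.
inside = [(a, d) for a, _, c, d in rows if in_window(c)]
outside = [(a, d) for a, _, c, d in rows if not in_window(c)]
corr_inside = pearson(*zip(*inside))
corr_outside = pearson(*zip(*outside))

print(f"corr(A, D) = {corr_a:.2f}   corr(B, D) = {corr_b:.2f}   corr(C, D) = {corr_c:.2f}")
print(f"corr(A, D) with C in window: {corr_inside:.2f}, outside: {corr_outside:.2f}")
```

Here we knew where to look because we wrote the rule ourselves; with real data, finding that window among all the possible conditions is the hard part.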

This is where our Discovery Engine excels. It captures those kinds of non-obvious patterns automatically.

2. LLMs for science?

LLMs trained on papers are often worse than useless: they generate text that looks plausible, based on a literature that may be fundamentally flawed, or outright wrong. Testing their outputs is expensive. Context length is limited. And disentangling preconception from novel insight is, to put it mildly, challenging.

That said, LLMs are excellent at contextualising results once you have them. We use them to connect discovered patterns back to existing scientific knowledge.

In the future, we imagine scientific agents powered by LLMs using the Discovery Engine just as human scientists do now.

3. AI for specific scientific domains?

Domain-specific AI systems – e.g. for drug discovery – are extremely useful. But they tend to bake in lots of assumptions, which can limit discovery. They help you make predictions, but not always understand why. And of course, a domain-specific model can’t be used outside of its domain.

Our Discovery Engine is domain-agnostic. It works on any data, from any field – and crucially, it doesn’t just make predictions. It shows us the underlying patterns that make those predictions possible.

Limitations

Right now, we handle tabular data completely automatically. We’re working on time-series. We have internal prototypes for image data, and some early experiments with text.

We’re actively expanding modality support – and because the Discovery Engine is largely model-agnostic under the hood, it's inherently extensible. Any modality (or combination of them) is fair game.

But of course, there are caveats.

+ Not all patterns are real. Some will be artefacts. Some will be noise. We work hard to filter these out – but like all scientists, we must remain cautious.

+ Extrapolations are just that. Most of the patterns we report can be backed up by subsets of the data, but we also report patterns that the model extrapolates beyond the data. Treat these with caution: they can sometimes uncover really interesting relationships, but they’re tentative hypotheses, not conclusions. When we report such patterns, we flag them clearly.

+ Garbage in, garbage out. If your dataset is deeply flawed, biased, or broken, we can’t magically fix that (though we do a lot of cleaning along the way). The onus remains on the scientist to gather large, high-quality datasets to maximise the novelty and robustness of the Discovery Engine’s output.

+ Human oversight matters. Despite the automation, human judgment is essential – it’s still up to us to decide what’s interesting, how much and what kind of data to gather, and what to do with these insights.
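One standard way to check whether an apparent pattern could just be noise is a permutation test: shuffle the target to break any real relationship, recompute the statistic many times, and see how often chance alone matches the observed value. The sketch below applies this to a Pearson correlation. It illustrates the general idea and is not a description of how the Discovery Engine filters patterns; the function names, the 1,000 permutations and the synthetic data are all assumptions.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def permutation_p_value(xs, ys, n_perm=1000, seed=0):
    """Estimate how often shuffled data gives a |correlation| at least as large as observed."""
    rng = random.Random(seed)
    observed = abs(pearson(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # breaks any real link between xs and ys
        if abs(pearson(xs, shuffled)) >= observed:
            hits += 1
    # Add-one correction: the observed ordering counts as one of the permutations.
    return (hits + 1) / (n_perm + 1)


rng = random.Random(42)
x = [rng.random() for _ in range(200)]
y_signal = [xi + rng.gauss(0.0, 0.3) for xi in x]  # genuinely depends on x
y_noise = [rng.gauss(0.0, 1.0) for _ in x]         # unrelated to x

p_signal = permutation_p_value(x, y_signal)
p_noise = permutation_p_value(x, y_noise)
print(f"p (real relationship): {p_signal:.4f}")
print(f"p (pure noise):        {p_noise:.4f}")
```

When thousands of candidate patterns are tested, p-values like these also need a multiple-comparisons correction (for example Benjamini-Hochberg), or chance alone will still let some noise through.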

Science is hard, and we’re not promising a silver bullet. But we think it’s time to lean into the age of data, and update our methods so we can use it to drive human progress.

Digging Deeper

For tabular data (and soon other modalities), the Discovery Engine is brilliantly automatic – throw in fairly messy data and it’ll be cleaned, modelled, interpreted, and results returned, no tuning required. Here’s what happens under the hood:

+ Data Ingestion: Scaling, encoding, imputation, deduplication, de-correlation – the works. We use heuristics to select appropriate steps based on the shape of the data.

+ AutoML: We built our own system from scratch. Most AutoML tools aren’t designed for interpretability or pattern extraction – we needed one that was. Ours dynamically selects architectures and guards against overfitting, so that any patterns we extract are genuinely learned from the data, rather than memorised noise or knowledge inherited from pre-training.

+ AutoInterp: This is where the magic happens. Our interpretability stack pulls out the patterns learned by the model. We distinguish between patterns that are well-supported (demonstrable in the data) and those that extrapolate. The former go in the “discovery” bucket; the latter go in “speculative hypothesis.” Both are valuable, but we flag them accordingly.

+ Report Generation: Language models help us contextualise the patterns – tying them to the existing literature, assessing novelty, identifying implications. We also analyse the dataset itself to suggest what more data could be helpful (e.g. "collect more samples where feature X1 = A and X2 ≤ 42, because we suspect this will be highly predictive of Y").
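A minimal sketch of how the “discovery” versus “speculative hypothesis” split, and the data-collection suggestions, could be operationalised, assuming a pattern is described by a condition on the rows and judged by how many observed rows satisfy it. The `Pattern` class, the `bucket_patterns` function and the `min_support` threshold of 30 rows are hypothetical, and support counting is a deliberately simple stand-in for whatever the real AutoInterp stack does.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple

Row = Dict[str, Any]


@dataclass
class Pattern:
    description: str                  # human-readable condition
    condition: Callable[[Row], bool]  # True for rows the pattern covers


def bucket_patterns(
    patterns: List[Pattern], rows: List[Row], min_support: int = 30
) -> List[Tuple[str, int, str, Optional[str]]]:
    """Label each pattern by how many observed rows fall in the region it describes."""
    report = []
    for p in patterns:
        support = sum(1 for r in rows if p.condition(r))
        if support >= min_support:
            report.append((p.description, support, "discovery", None))
        else:
            # Too few rows back this up: treat it as an extrapolation and ask for data.
            advice = f"collect more samples where {p.description} (only {support} observed)"
            report.append((p.description, support, "speculative hypothesis", advice))
    return report


# Hypothetical dataset: X1 == "A" is rare, X2 cycles through 0..99.
rows = [{"X1": "A" if i % 50 == 0 else "B", "X2": i % 100} for i in range(1000)]
patterns = [
    Pattern("X2 <= 42", lambda r: r["X2"] <= 42),
    Pattern("X1 = A and X2 <= 42", lambda r: r["X1"] == "A" and r["X2"] <= 42),
]

report = bucket_patterns(patterns, rows)
for description, support, label, advice in report:
    print(f"{description}: support={support} -> {label}" + (f"; {advice}" if advice else ""))
```

The threshold is arbitrary; the point is only that a pattern the data barely covers should be reported differently from one the data demonstrates.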

What’s next?

We first used small synthetic datasets to benchmark our process against ground-truth patterns as we prototyped the Discovery Engine. This worked! Then we used open-source data from existing publications to see if we could replicate known patterns from the literature – this also worked! Now we’re just beginning to use real-world data to find novel patterns. We’ve partnered with researchers in academia and industry to access real, messy, new data that isn’t publicly available – and we have already made novel discoveries, with publications in progress.

We’re excited to hear from scientists with interesting data of any kind.